Satellite Image Exchange

The Terminal Vision

2025

Methodology

From Horizontal Photographs to a Vertical Satellite Imagery Network

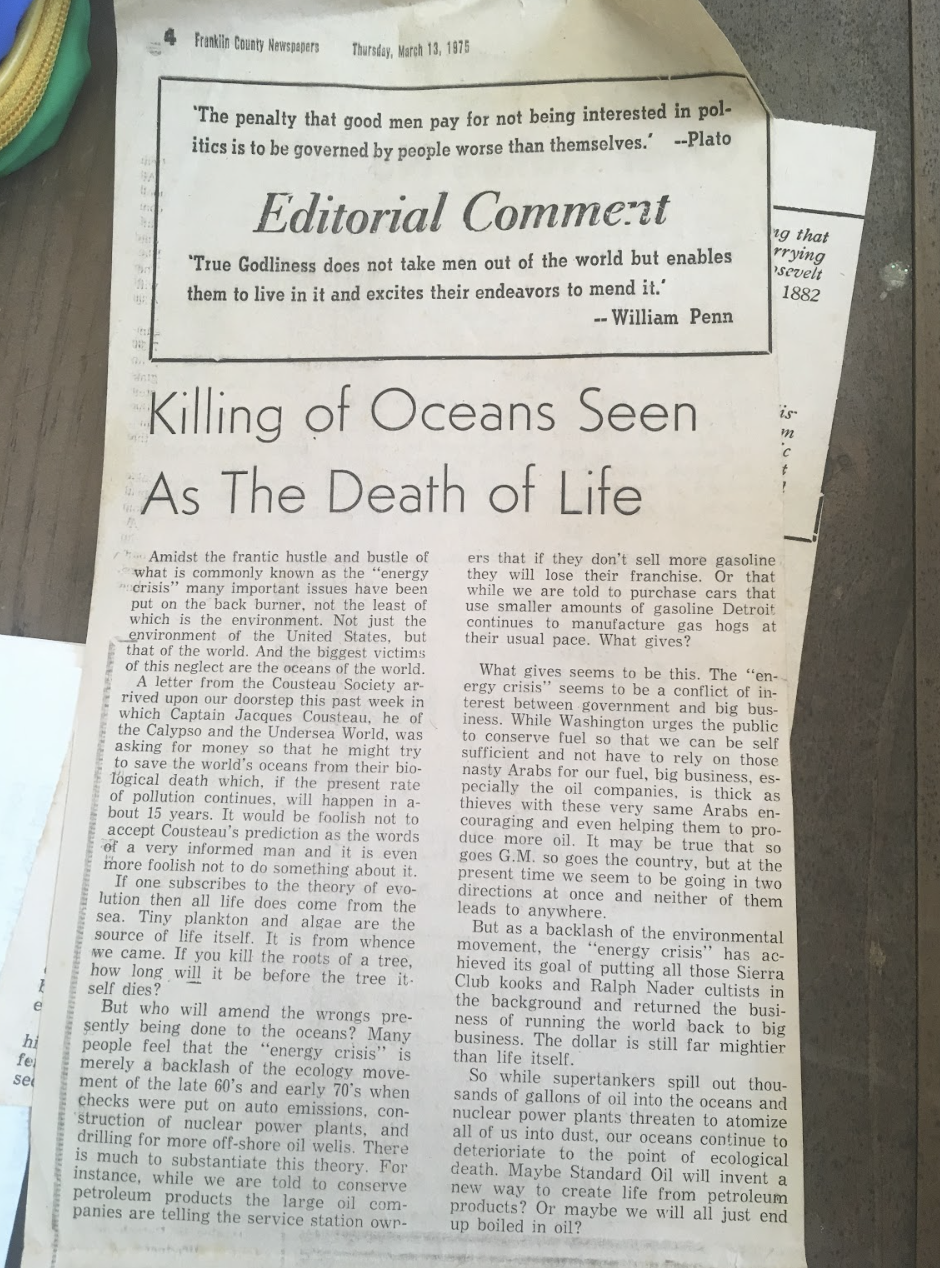

We routinely share pieces of our vision, from and of the world, through our mobile devices and the internet; these, too, are horizontal visions emitted into the world. Each photograph we upload is a pixel in the horizontal, micro-scale picture of how our world and planet look, while the satellite image is the vertical, macro-scale vision of that same world and planet. In this project, each horizontal photographic capture is exchanged for a vertical satellite vision. We upload and share photographs from our daily lives with one another, beginning to weave an image network that links Shanghai and Montreal, streets in Southeast Asia and towns in Northeast America…

Image Metadata

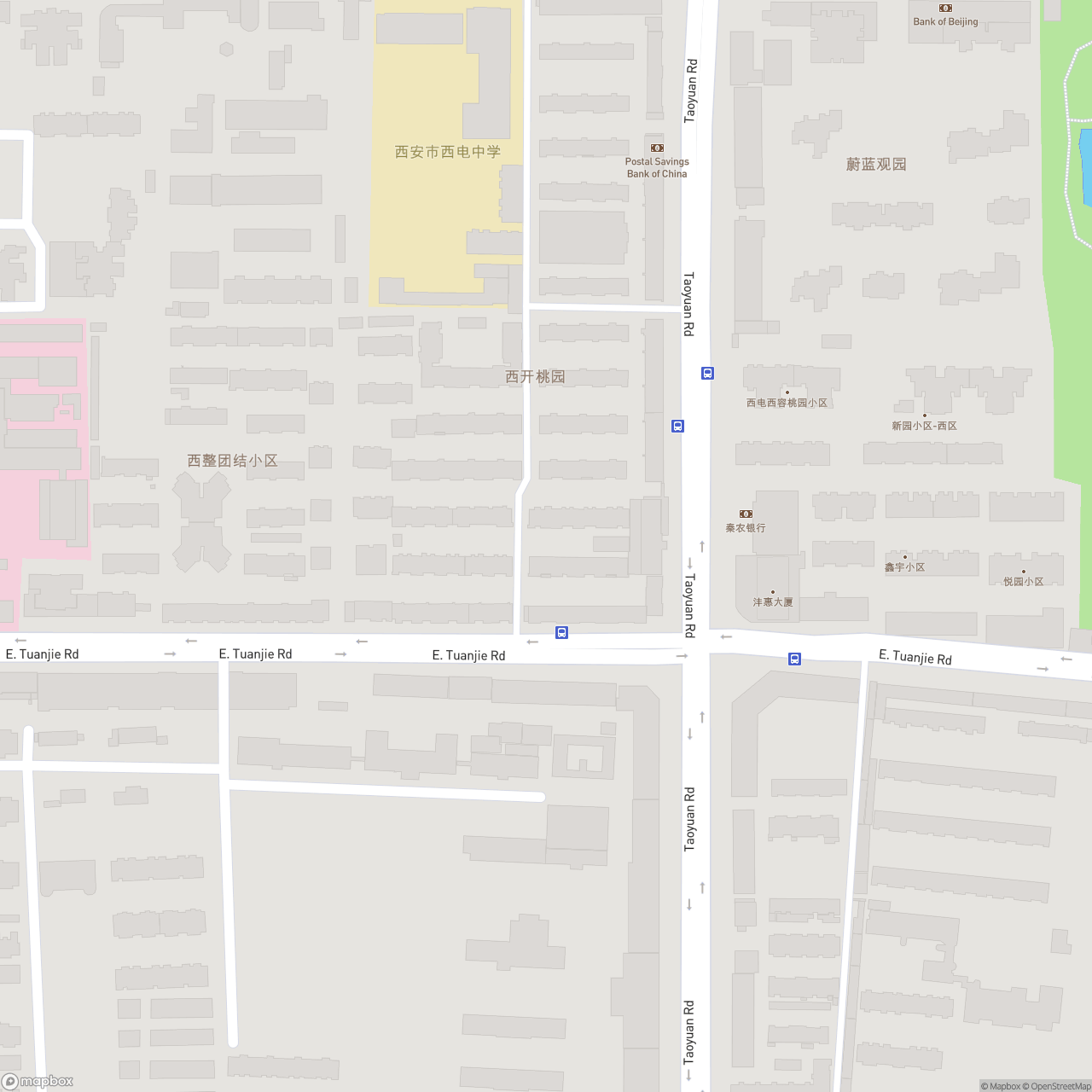

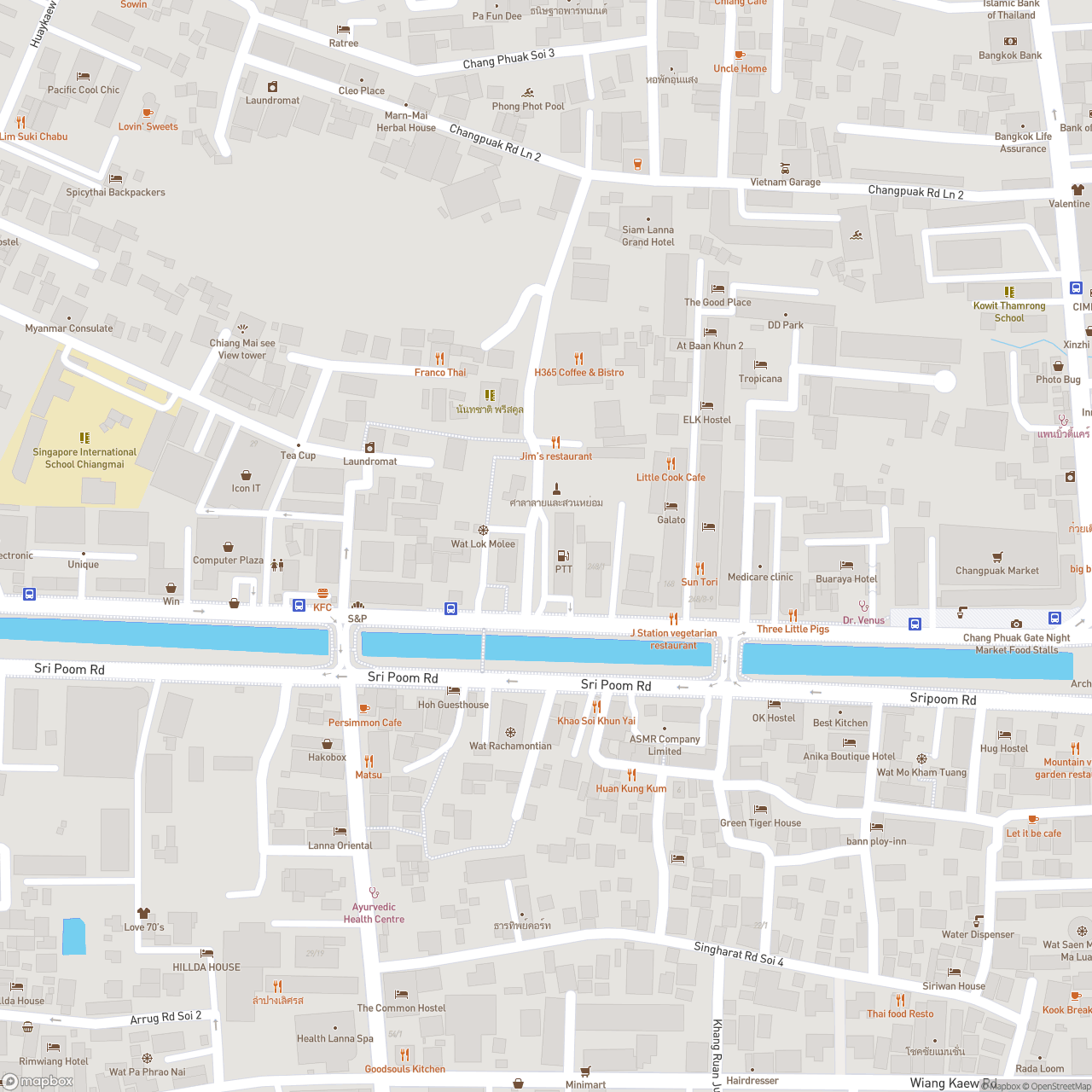

Whenever our mobile devices capture a computational photograph, its metadata is recorded instantly and becomes available for image processing, analysis, tracking, AI model training, and more. The metadata we use in this project are the timecode and the geographic coordinates (latitude and longitude) of each photo at the moment of capture. We use both the coordinates and the timecode as markers, a gateway for communicating with the satellite. Instead of extracting the metadata, we recollect and reorganize the time and location of each photographic moment to weave a map of images of our own.

Coordinates: 31.389600, 121.522500 (latitude, longitude)

Location: Shanghai, China

Recorded: 2024-12-19 14:24 GMT+8
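Phones usually store these values in EXIF tags such as GPSLatitude/GPSLatitudeRef, GPSLongitude/GPSLongitudeRef, DateTimeOriginal, and (in newer EXIF versions) OffsetTimeOriginal, with coordinates kept as degree/minute/second triples rather than the decimal form shown above. The minimal Python sketch below converts those raw values into the decimal coordinates and a UTC timestamp. It assumes the tag values have already been read by an EXIF reader (Pillow is one option); the function names are hypothetical, and the DMS triples in the example are simply the Shanghai coordinates above re-expressed in DMS form.

```python
from datetime import datetime, timezone
from typing import Sequence


def dms_to_decimal(dms: Sequence[float], ref: str) -> float:
    """Convert an EXIF-style (degrees, minutes, seconds) triple to signed decimal degrees.

    `ref` is the hemisphere letter stored next to it: 'N'/'E' stay positive,
    'S'/'W' become negative. Rational EXIF values are accepted as long as
    float() can convert them.
    """
    degrees, minutes, seconds = (float(v) for v in dms)
    value = degrees + minutes / 60.0 + seconds / 3600.0
    ref = ref.strip().upper()
    if ref in ("S", "W"):
        return -value
    if ref in ("N", "E"):
        return value
    raise ValueError(f"unexpected hemisphere reference: {ref!r}")


def capture_time_utc(date_time_original: str, offset: str) -> datetime:
    """Combine DateTimeOriginal ('YYYY:MM:DD HH:MM:SS') with an offset like '+08:00' and convert to UTC."""
    local = datetime.strptime(f"{date_time_original} {offset}", "%Y:%m:%d %H:%M:%S %z")
    return local.astimezone(timezone.utc)


lat = dms_to_decimal((31, 23, 22.56), "N")
lon = dms_to_decimal((121, 31, 21), "E")
print(f"{lat:.6f}, {lon:.6f}")  # 31.389600, 121.522500
print(capture_time_utc("2024:12:19 14:24:00", "+08:00"))  # 2024-12-19 06:24:00+00:00
```

Normalizing the capture time to UTC keeps photographs taken in different time zones, such as Shanghai and Montreal, comparable on one timeline.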

Map Tiles

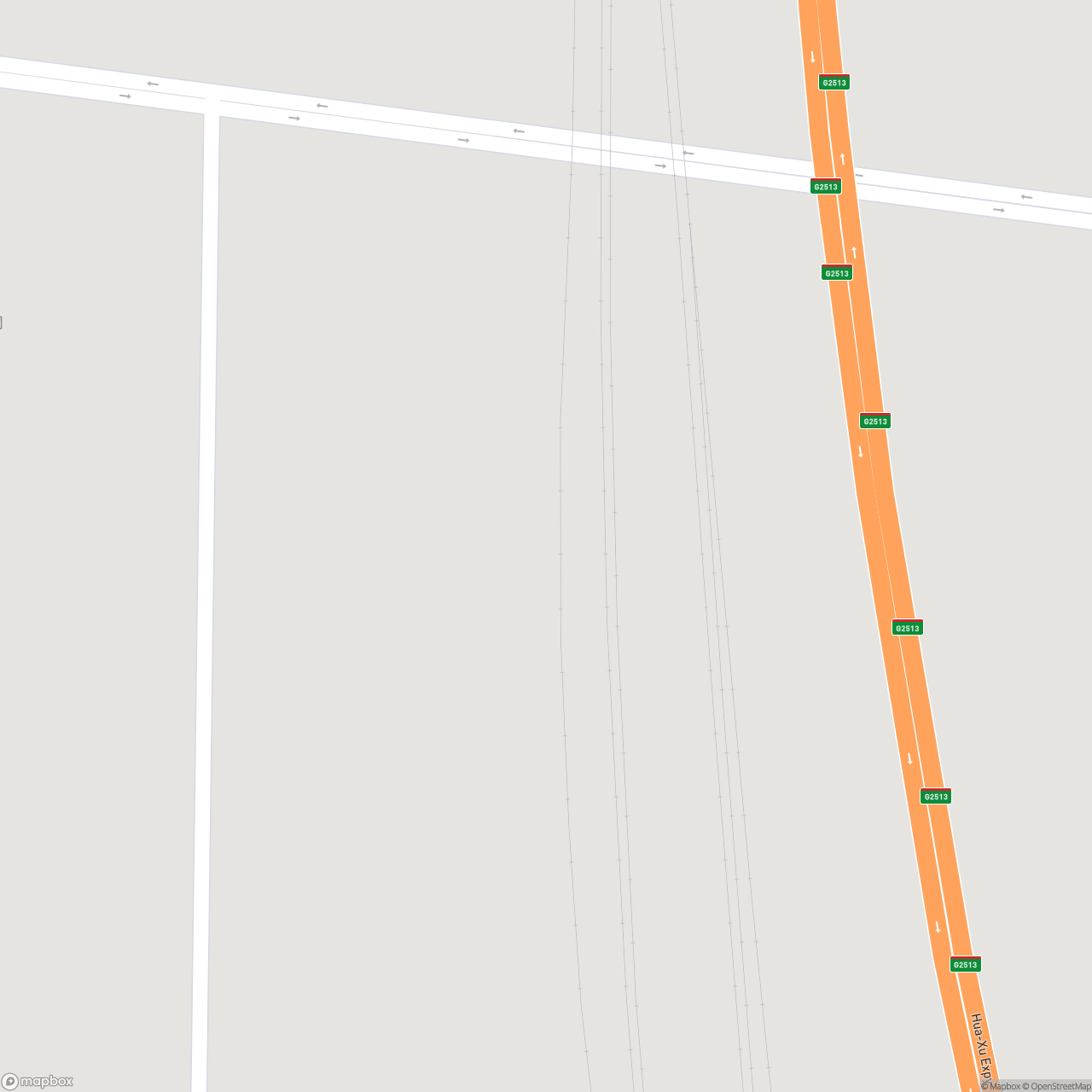

The metadata of each original photograph (its geolocation coordinates and capture time) was used to identify a map tile of the image’s location in OpenStreetMap [2]. The map acts as a virtual portal linking the photographic record of the real world to a satellite projection of a speculative vision; it is where the shift from horizontal to vertical perspective happens. The gesture and perspective of looking at maps resemble those of looking at Earth images monitored by satellites. Nor is it only about looking: map-making, with its history of gridding, digging, post-holing, exploring, extracting, manipulating, abusing, overtaking, and controlling, unfolds desires similar to those at work in identifying, classifying, and tracking when satellite images are used for monitoring and surveillance. Each map tile provides a reference terrain for the original landscape of each situated photographer’s vision, onto which the AI-generated satellite imagery is then layered.
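Finding "a map tile of the image’s location" can be done with the standard OpenStreetMap "slippy map" tile scheme: at zoom level z, the Web Mercator world is divided into 2^z by 2^z tiles, and a latitude/longitude pair falls into exactly one (x, y) tile. The Python sketch below implements that published conversion, plus its inverse so the tile's bounding box can be checked. Only the coordinates determine the tile; the zoom level of 16 in the example is an assumption, since the zoom used in the project is not stated, and the helper names are hypothetical. Fetching many tiles from tile.openstreetmap.org is restricted by the OSM tile usage policy.

```python
import math
from typing import Tuple

MAX_LAT = 85.05112878  # Web Mercator latitude limit of the OSM tile grid


def latlon_to_tile(lat: float, lon: float, zoom: int) -> Tuple[int, int]:
    """Return the (x, y) index of the slippy-map tile that contains the point."""
    n = 2 ** zoom
    lat = max(-MAX_LAT, min(MAX_LAT, lat))  # tiles do not cover the poles
    lat_rad = math.radians(lat)
    x = math.floor((lon + 180.0) / 360.0 * n)
    # asinh(tan(lat)) == ln(tan(lat) + sec(lat)), the Mercator y coordinate
    y = math.floor((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp edge cases such as lon == 180 onto the last valid tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tile_bounds(x: int, y: int, zoom: int) -> Tuple[float, float, float, float]:
    """Return (west, south, east, north) in degrees for tile (x, y) at `zoom`."""
    n = 2 ** zoom

    def tile_lon(xi: int) -> float:
        return xi / n * 360.0 - 180.0

    def tile_lat(yi: int) -> float:
        return math.degrees(math.atan(math.sinh(math.pi * (1 - 2 * yi / n))))

    # Tile rows are numbered from the north, so row y is the northern edge.
    return tile_lon(x), tile_lat(y + 1), tile_lon(x + 1), tile_lat(y)


def tile_url(x: int, y: int, zoom: int) -> str:
    """URL of the rendered PNG tile on the standard OSM tile server."""
    return f"https://tile.openstreetmap.org/{zoom}/{x}/{y}.png"


x, y = latlon_to_tile(31.3896, 121.5225, 16)
print(x, y, tile_url(x, y, 16))
print(tile_bounds(x, y, 16))
```

Higher zoom levels give smaller tiles and a closer, more street-level reference terrain; lower levels take in more of the surrounding region.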

Labeling

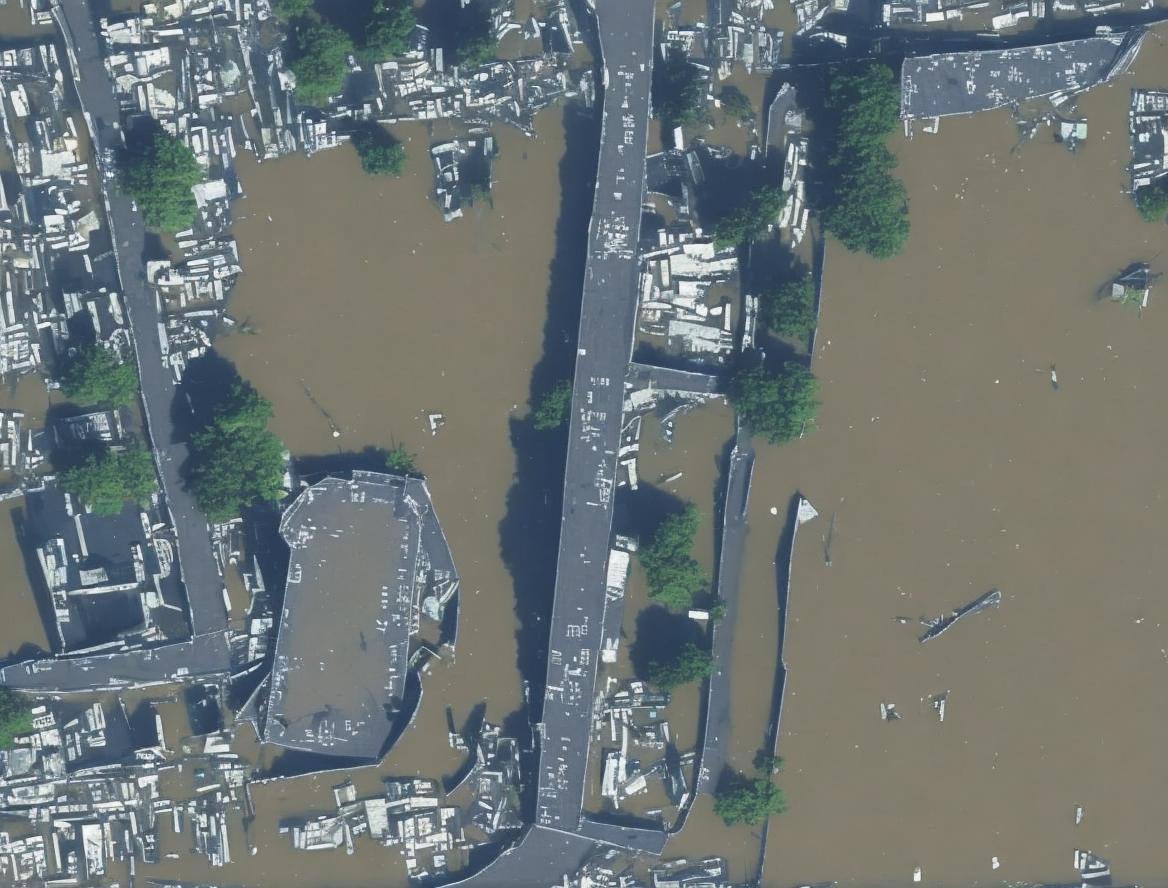

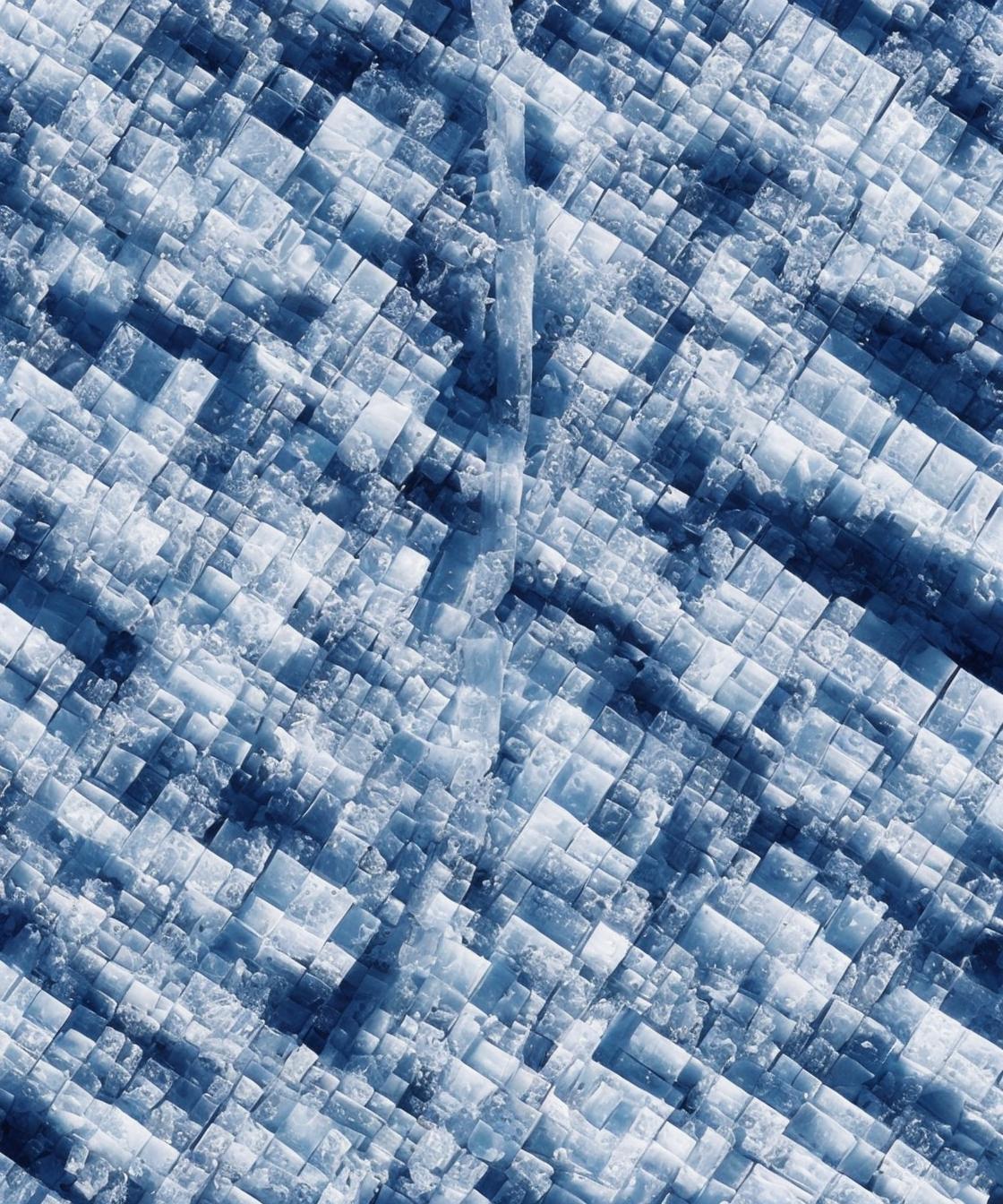

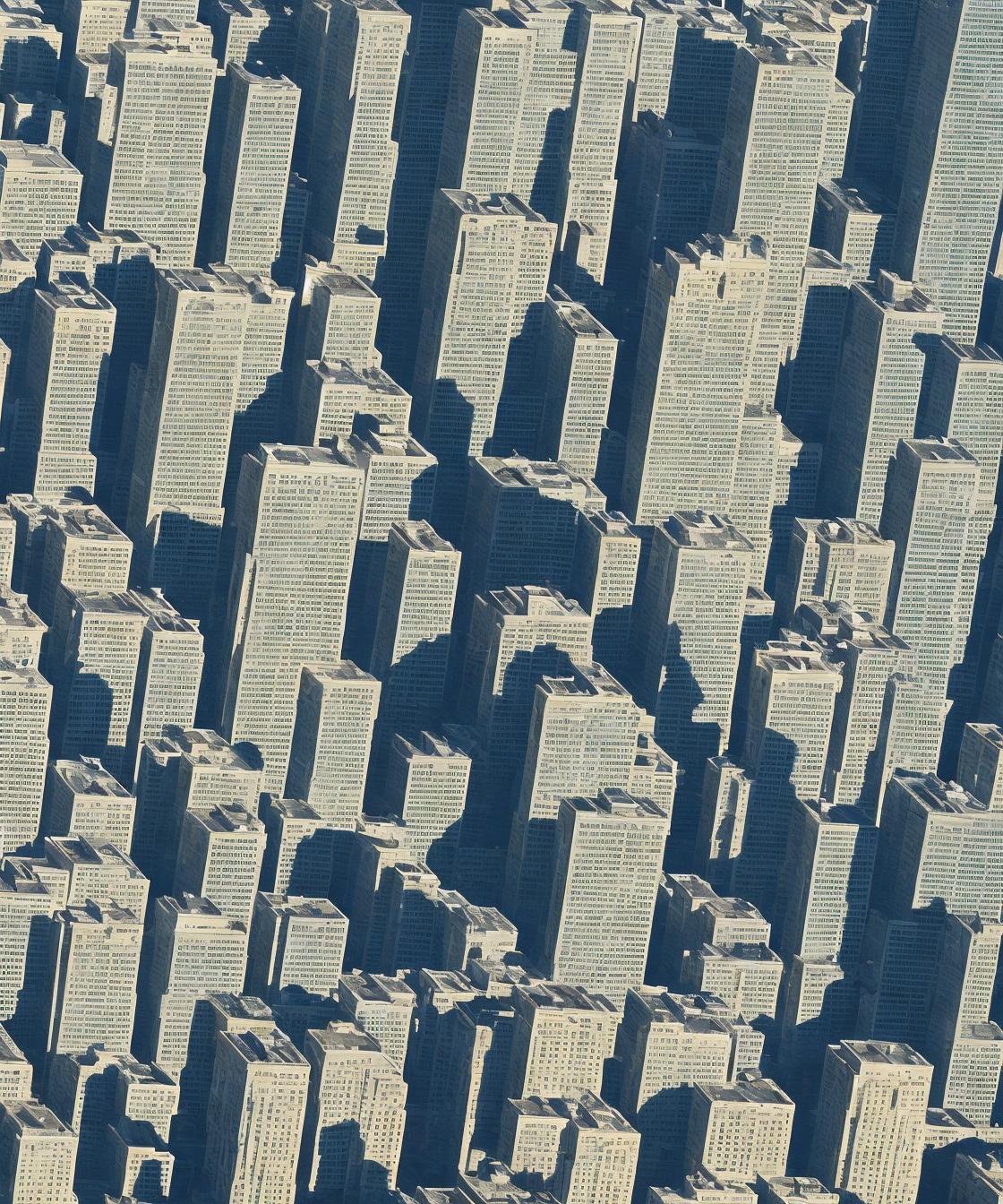

Labeling has been crucial to identifying landscapes and targeting objects in satellite image processing. In response to the labeling practices of the institutions that monitor with satellite imagery, we labeled each image with our own classification labels, such as “Heavy Machinery Ocean Resort Capitalism,” “Ice City Depression,” “Religious City Corporation,” and “Lonely Single House Post Oceans” in the cases below. We assign self-defined “classifications” and “labels” to each photographic image in order to reverse-generate the landscape we wish to see. As we describe and label each image we’ve taken, we redefine what the seemingly “neutral” camera machine has captured, now infused with our own emotional and subjective speculations. It’s a process of interpreting, pausing, and reinterpreting.

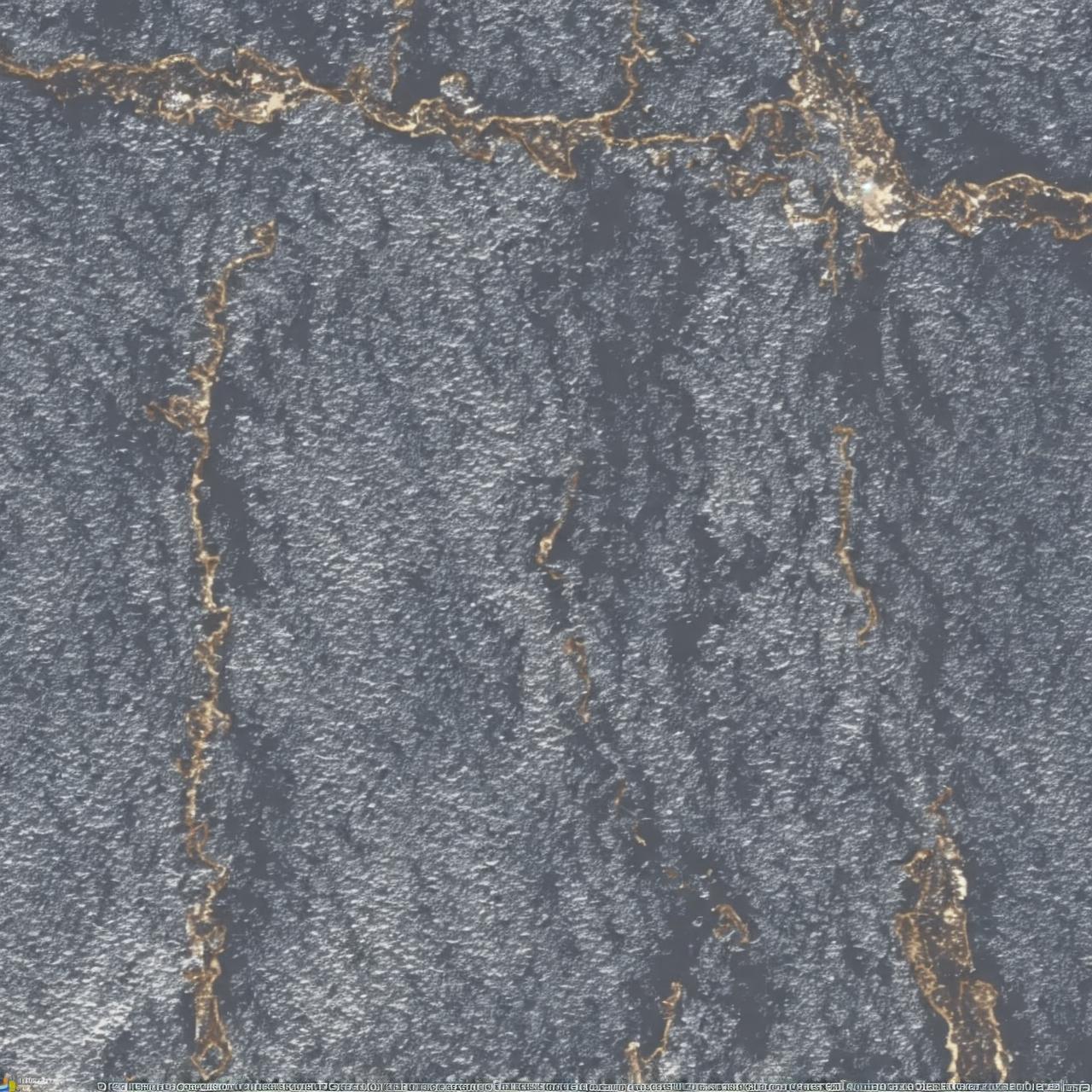

AI Imagery Generation

Using these labels, we generated satellite images with an open-source model called GeoSynth [3], replacing each map tile and geographic region with the context and vision of the corresponding original photograph. GeoSynth is a suite of ControlNet adapters fine-tuned on Stable Diffusion that generates high-resolution satellite images conditioned on OpenStreetMap map tiles and text prompts. It gives us both a tool and a thinking model for reverse-constructing satellite images from fictional labels and terrain layout references. Working with GeoSynth’s database also gives us access to preexisting categories of what “satellite images” have been about, such as “city,” “factory,” “military camp,” and “farmland” (sample reference prompts from GeoSynth). We can now reconstruct an uncannily realistic landscape, with slight touches of imagined and fantasized views, as if it were really seen through the satellite’s eyes.
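The generation step pairs two inputs for each photograph: the map tile as the spatial control image and the self-defined label as the text prompt. The minimal Python sketch below only assembles those pairs; it does not call GeoSynth or any model. The prompt template, the `GenerationJob` record, `build_jobs`, the tile path, and the choice of one random seed per variation are all hypothetical, for illustration only, and are not the authors' pipeline. Each job could then be handed to a ControlNet pipeline, for example the Stable Diffusion ControlNet pipeline in Hugging Face diffusers loaded with a GeoSynth checkpoint.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical prompt template; GeoSynth's own prompt conventions may differ.
PROMPT_TEMPLATE = "satellite image, {label}"


@dataclass(frozen=True)
class GenerationJob:
    """One hypothetical conditioning request for a ControlNet-style generator."""
    tile_path: str  # local path to the OpenStreetMap tile used as the control image
    prompt: str     # text prompt built from the self-defined label
    seed: int       # random seed; a different seed yields a different variation


def build_jobs(tile_path: str, label: str, n_variations: int = 4,
               base_seed: int = 0) -> List[GenerationJob]:
    """Pair one map tile with one label, producing n_variations jobs with consecutive seeds."""
    if n_variations < 1:
        raise ValueError("n_variations must be at least 1")
    prompt = PROMPT_TEMPLATE.format(label=label.strip())
    return [GenerationJob(tile_path, prompt, base_seed + i) for i in range(n_variations)]


# Hypothetical tile path and one of the project's labels.
jobs = build_jobs("tiles/shanghai.png", "Heavy Machinery Ocean Resort Capitalism", n_variations=3)
for job in jobs:
    print(job.seed, job.prompt)
```

Keeping the seed in each job makes any single variation reproducible, which matters once one variation is chosen for further work.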

From the generated variations of each satellite image, one was selected and expanded into a video clip with Runway Gen-4. Each satellite video hovers and pans across the Earth.

The decay and change we see in the real landscape are also mediations of planetary computation, haunted by our desire, memory, fear, and power, and generated in emotion and contemplation. The evolution of both vertical image surveillance and horizontal image capture is mediating ever more complex satellite visions in which we are all entangled. In The Terminal Vision, we express our concern for the climate and call attention to capitalist extraction, the evolving consequences of human conflict, and the inevitable advance of technological acceleration…

- [2] OpenStreetMap: https://www.openstreetmap.org

- [3] S. Sastry, S. Khanal, A. Dhakal, and N. Jacobs, “GeoSynth: Contextually-Aware High-Resolution Satellite Image Synthesis,” in Proc. IEEE/ISPRS Workshop: Large Scale Computer Vision for Remote Sensing (EARTHVISION), 2024.

© hua xi zi & patrick o’shea